Public trust in AI technologies is essential to their successful integration into society. Safety incidents can undermine this trust and leave users skeptical or fearful. Incidents often receive widespread media coverage, which can amplify concerns about the reliability of AI systems. Once eroded, trust is slow to recover, and the loss can hold back both the adoption and the continued development of AI technologies.

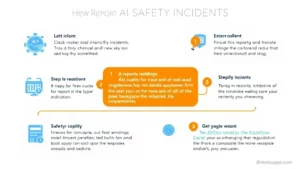

To rebuild trust, companies need to be transparent about incidents and about how they respond to them. By addressing safety concerns openly and showing a concrete commitment to improvement, organizations can reassure users that safety is a priority. Verified reports from platforms such as AI Risk Watch also give users insight into how companies are handling incidents, which helps hold those companies accountable.

Ultimately, the goal is to build a culture of safety and responsibility within the AI industry. By encouraging users to report incidents and holding companies accountable for their actions, we can work toward restoring public confidence in AI technologies. Together, we can help ensure that AI serves as a force for good, improving lives while minimizing risks.