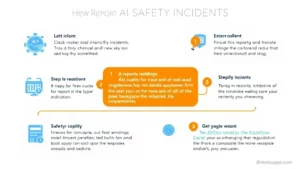

In AI safety, verification is central to building trust among users and stakeholders. Each reported incident is reviewed to confirm that it is authentic. This review makes the report more credible and helps ensure that the information shared is accurate. By holding verification to a high standard, AI Risk Watch aims to build a database that users can rely on.

The verification process combines expert analysis with cross-referencing against existing data. This helps filter out false reports so that only genuine incidents are published. Users can therefore be confident that the information they access has been vetted by professionals in the field. This level of scrutiny is essential for fostering accountability within the AI industry.

Verified reports are also a valuable resource for companies looking to improve their AI systems. By understanding the nature of verified incidents, organizations can make the changes needed to mitigate similar risks. This proactive approach improves safety and encourages continuous improvement in AI development.